Spreadsheet software lacks syntax highlighting, autocomplete, code browsing, and perhaps any other feature you might want out of a code editor. However, there is one aspect of programming in which LibreOffice Calc excels beyond its classical contemporaries.

In this thought experiment, allow me to show you what spreadsheets have to offer to the design of computer languages.

A different medium

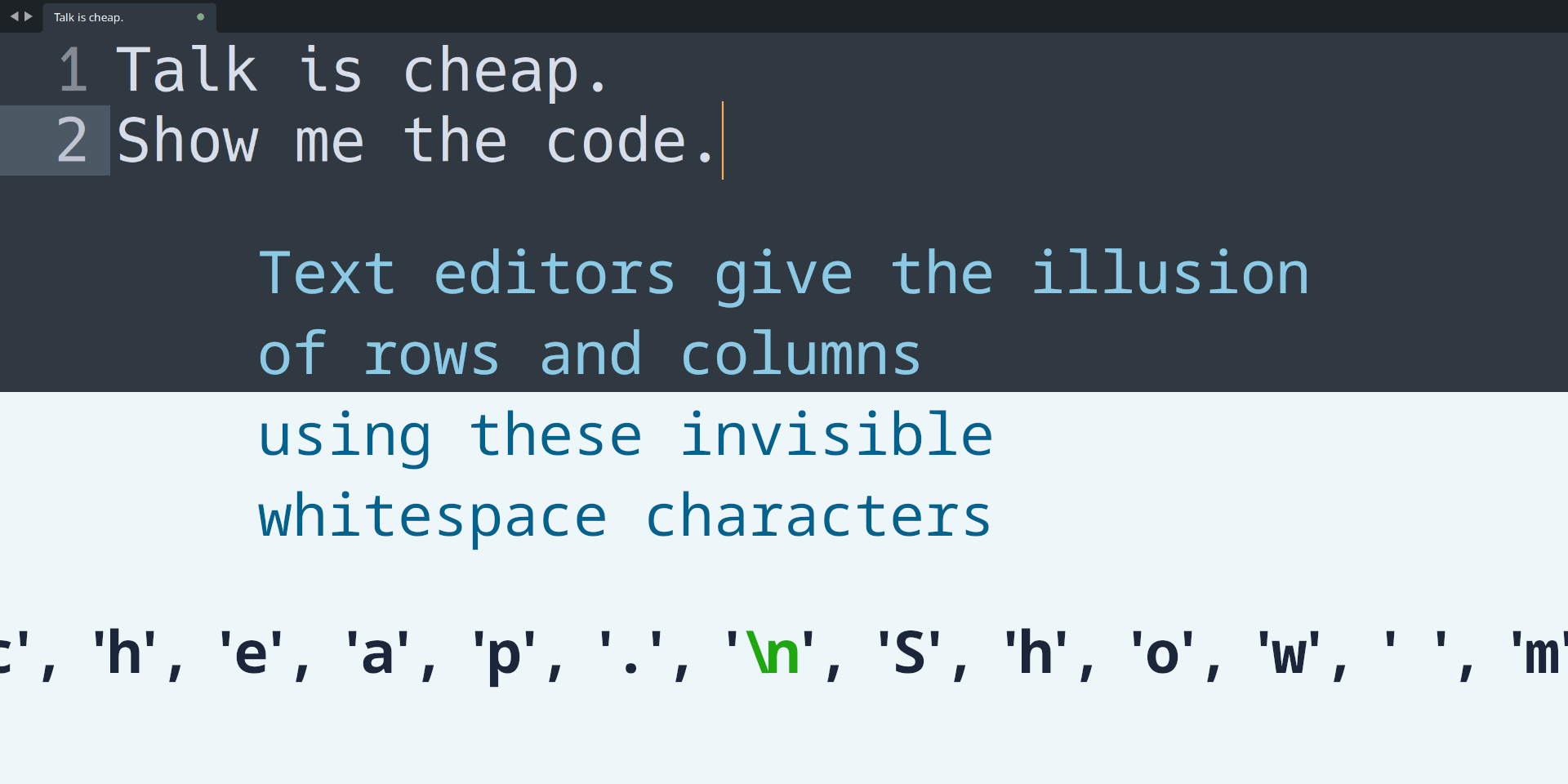

Plain-text¹ is an unbroken sequence of symbols extending to infinity. It is purely one-dimensional. We are used to seeing rows and columns in our text files, but this is merely the perception our tools grant us for our convenience. The symbols are still arranged one after the other, in a single dimension.

Don’t think too much about the infinities here. We’re in the abstract world right now, and I’ve used abstract terms because I don’t want us to focus on memory limitations or the number of atoms in the universe.

I do want us to think about the data structures behind the user interfaces of these mediums.

In the case of plain-text, this is an array with one dimension where each element stores one character². Unlike plain-text, the rows and columns in spreadsheets are very real.

For spreadsheets, a single cell is equivalent to a complete text file³. We have cells running along two axes, which adds two dimensions. A workbook can contain any number of spreadsheets, and this adds another dimension to our data structure.
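To make the dimensions concrete, here is a minimal Python sketch. It assumes plain-text is modelled as a `str` and a workbook as nested dictionaries keyed by sheet name and (row, column). The names `Workbook`, `workbook`, and `symbol_at` are hypothetical, and this is not how Calc actually stores its files.

```python
# Plain-text: a single dimension. Every symbol has exactly one index, and
# "line breaks" are just '\n' symbols sitting in the same sequence.
plain_text: str = "let x = 1\nprint x"

# A workbook: sheet name -> (row, column) -> cell content. Each cell holds a
# string, i.e. its own little one-dimensional text file.
Workbook = dict[str, dict[tuple[int, int], str]]

workbook: Workbook = {
    "Sheet1": {
        (0, 0): "let", (0, 1): "x", (0, 2): "=", (0, 3): "1",
        (1, 0): "print", (1, 1): "x",
    },
}

def symbol_at(book: Workbook, sheet: str, row: int, col: int, index: int) -> str:
    """Locate one symbol using four coordinates: sheet, row, column, offset in cell."""
    return book[sheet][(row, col)][index]

print(plain_text[4])                           # x   (one coordinate)
print(symbol_at(workbook, "Sheet1", 1, 0, 0))  # p   (four coordinates)
```

The cell itself contributes one dimension, the grid of cells contributes two, and the sheets contribute one more.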

Bounded tokens

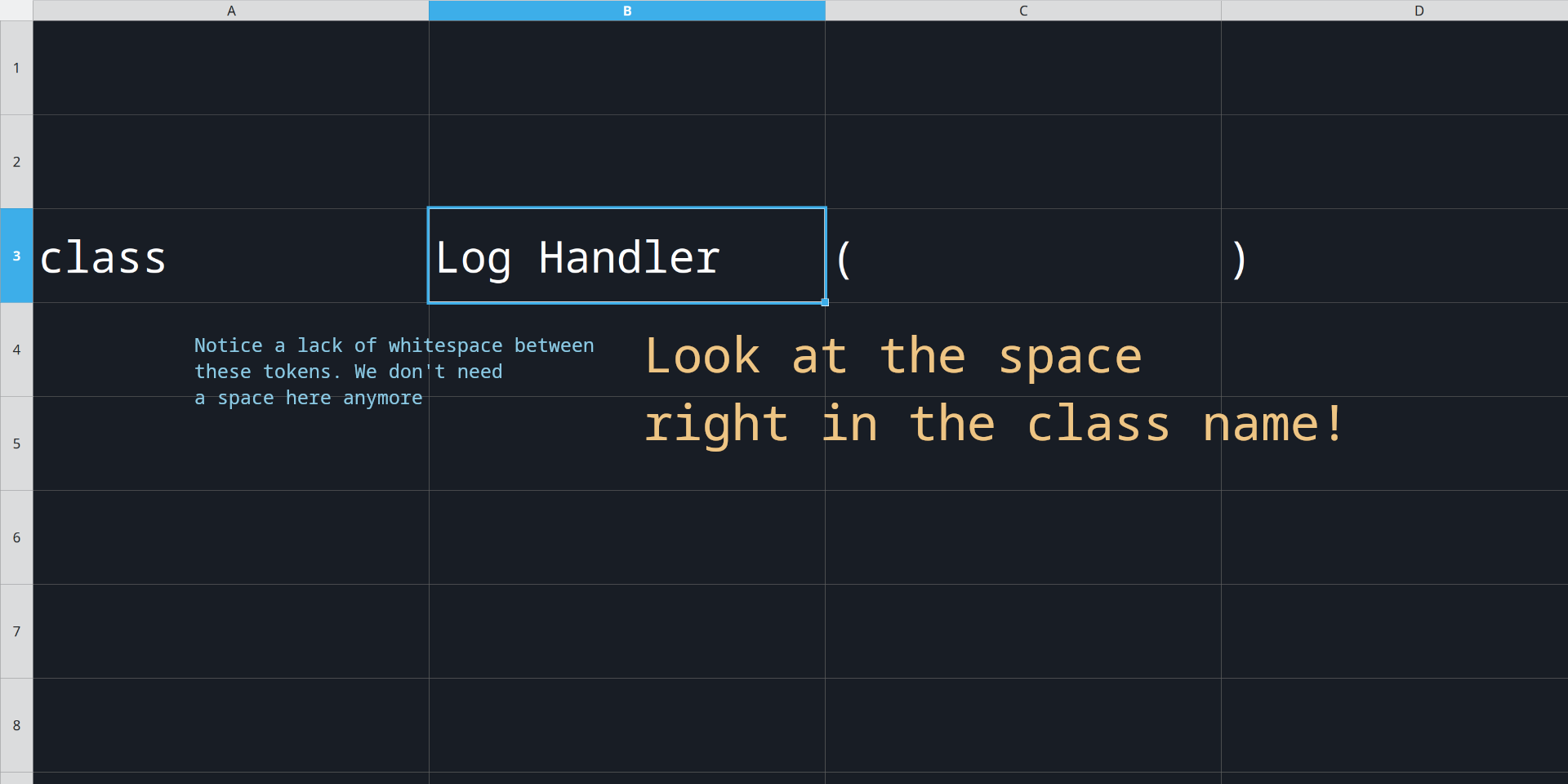

The first feature I want you to see is the natural boundary between cells. The contents of one cell are entirely enclosed within its walls, and these walls are granted to us by the medium.

A computer language designed for spreadsheets could exploit cells for storing individual tokens. A parser for this language can skip the tokenization phase completely because the tokens are already separated by cells! This is what gives Calc an edge over other code editors.

Text-file languages normally use whitespace as token separators. Since tokens are now separated by cell boundaries, we don’t need to assign any special significance in the language grammar to whitespace. Whitespace can now be a part of tokens without affecting the rest of the grammar.
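The difference shows up clearly in a small sketch. It assumes a row of cells is represented as a Python list of strings, which is a hypothetical representation chosen for illustration. For cell tokens, the "lexer" only reads the cells. A whitespace-based text lexer, by contrast, breaks apart any token that contains a space.

```python
def lex_text(source: str) -> list[str]:
    # A typical text lexer treats whitespace as a separator between tokens.
    return source.split()

def lex_cells(row: list[str]) -> list[str]:
    # With one token per cell, the medium has already drawn the boundaries.
    # There is nothing to split: each cell's content is one token, as-is.
    return list(row)

text_tokens = lex_text("2D Vector = make vector")
cell_tokens = lex_cells(["2D Vector", "=", "make vector"])

print(text_tokens)  # ['2D', 'Vector', '=', 'make', 'vector']
print(cell_tokens)  # ['2D Vector', '=', 'make vector']
```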

Identity crisis

With whitespace losing its special status, language grammars can be simpler and more flexible. We can put spaces in our identifiers now. No more Snake-, Camel-, or Pascal-case. This is what we’ve always wanted. How far can this approach take us?

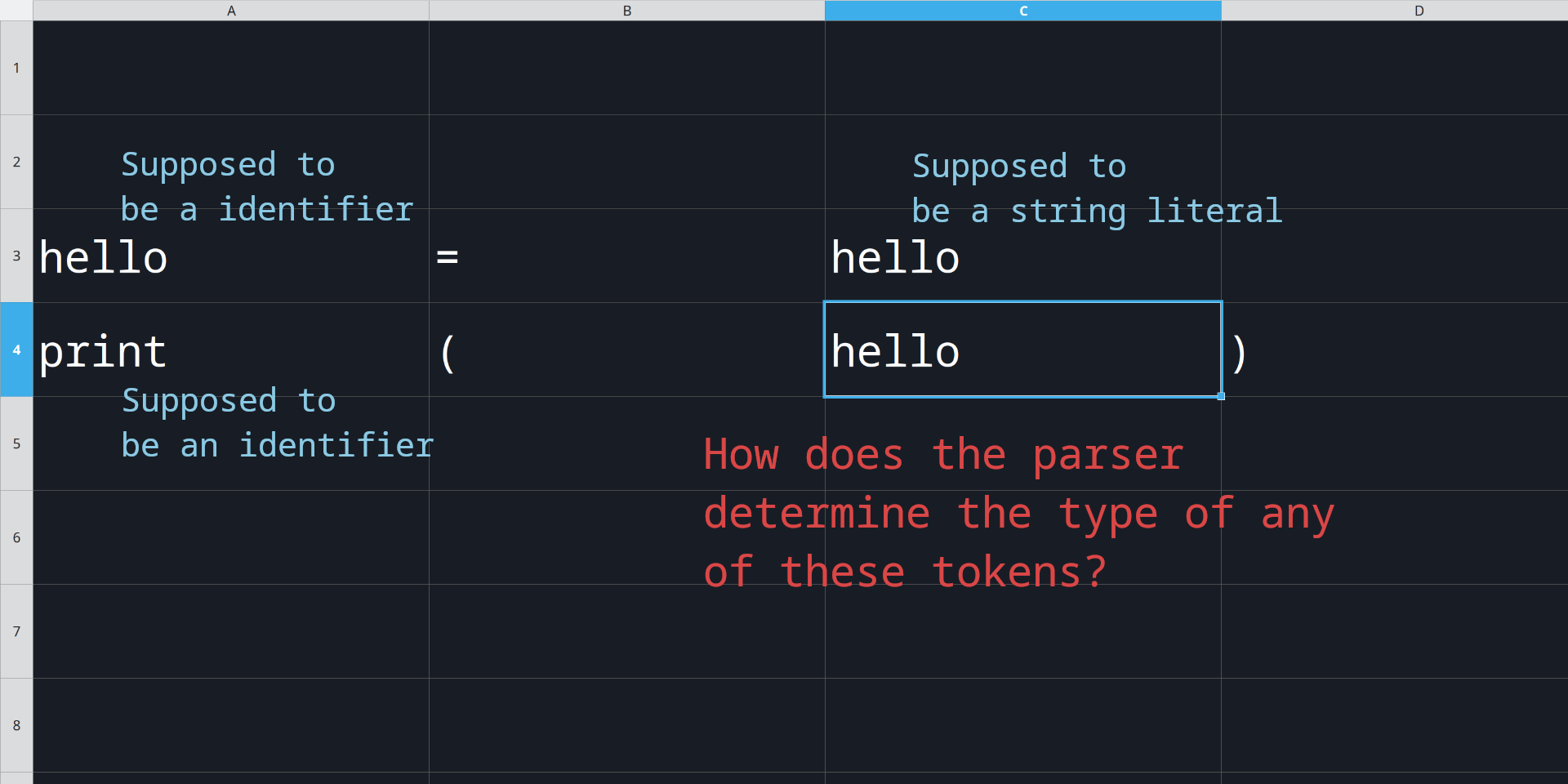

Let’s look at string literals.

In most computer languages, they are delimited by " or '. Now that we have solid token boundaries, we don’t really need these anymore, right?

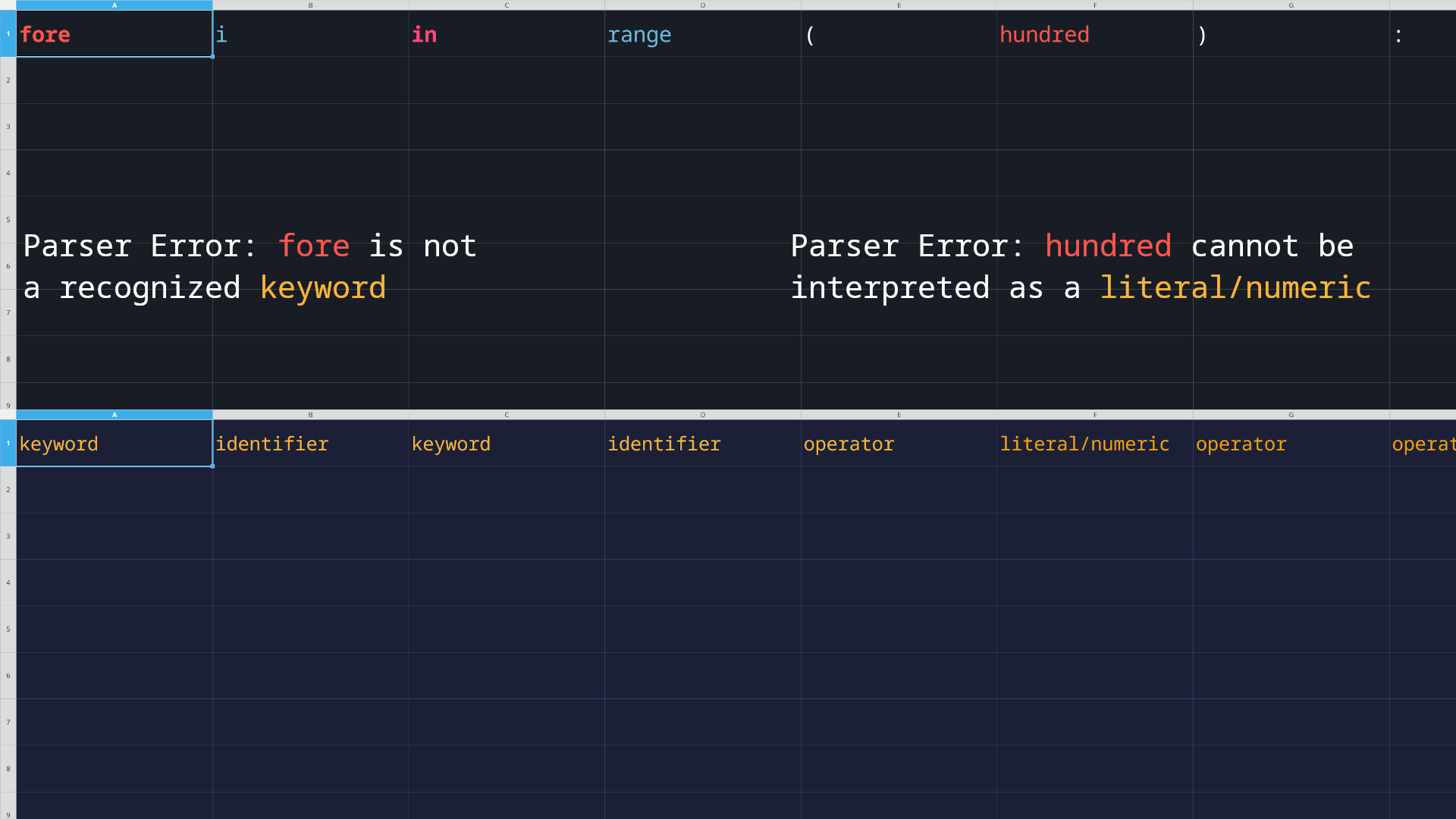

The string hello does not look any different from the variable hello.

How would the parser tell these apart?

There are more edge cases. A function named interrupt.handle⁴ is perfectly valid because of token boundaries, but a function named simply .⁵ would collide with the member access operator (.).

Furthermore, our parser can handle a variable called 2D Vector, but how would it know that 404 is the name of a class and not a numeric literal?

There are plenty of these edge cases, and it’s worth solving them because we want to remove the ambiguity in our communication. There is an opportunity in this identity crisis. The user definitely knows how they want each token to be interpreted.

What if we don’t make the machine guess from context clues?
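One way to feel the identity crisis is to write the function the parser would need: one that looks only at a token's characters and guesses its type. This is a hypothetical sketch, and the `guess_type` name and the tiny keyword set are assumptions. Its weakness is the point. Whatever order the rules are checked in, some of the user's intended meanings become impossible to express.

```python
KEYWORDS = {"if", "else", "return"}  # hypothetical keyword set

def guess_type(token: str) -> str:
    """Infer a token's type from its characters alone, as a grammar must."""
    if token in KEYWORDS:
        return "keyword"
    if token == ".":
        return "member access"
    try:
        float(token)  # accepts "404" and "1e3", but also "inf" and "nan"
        return "number"
    except ValueError:
        return "identifier"

print(guess_type("404"))    # number: a class named 404 is impossible
print(guess_type("hello"))  # identifier: the string hello is impossible
print(guess_type("."))      # member access: a function named . is impossible
print(guess_type("inf"))    # number: even a variable named inf is lost
```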

Descriptive tokens

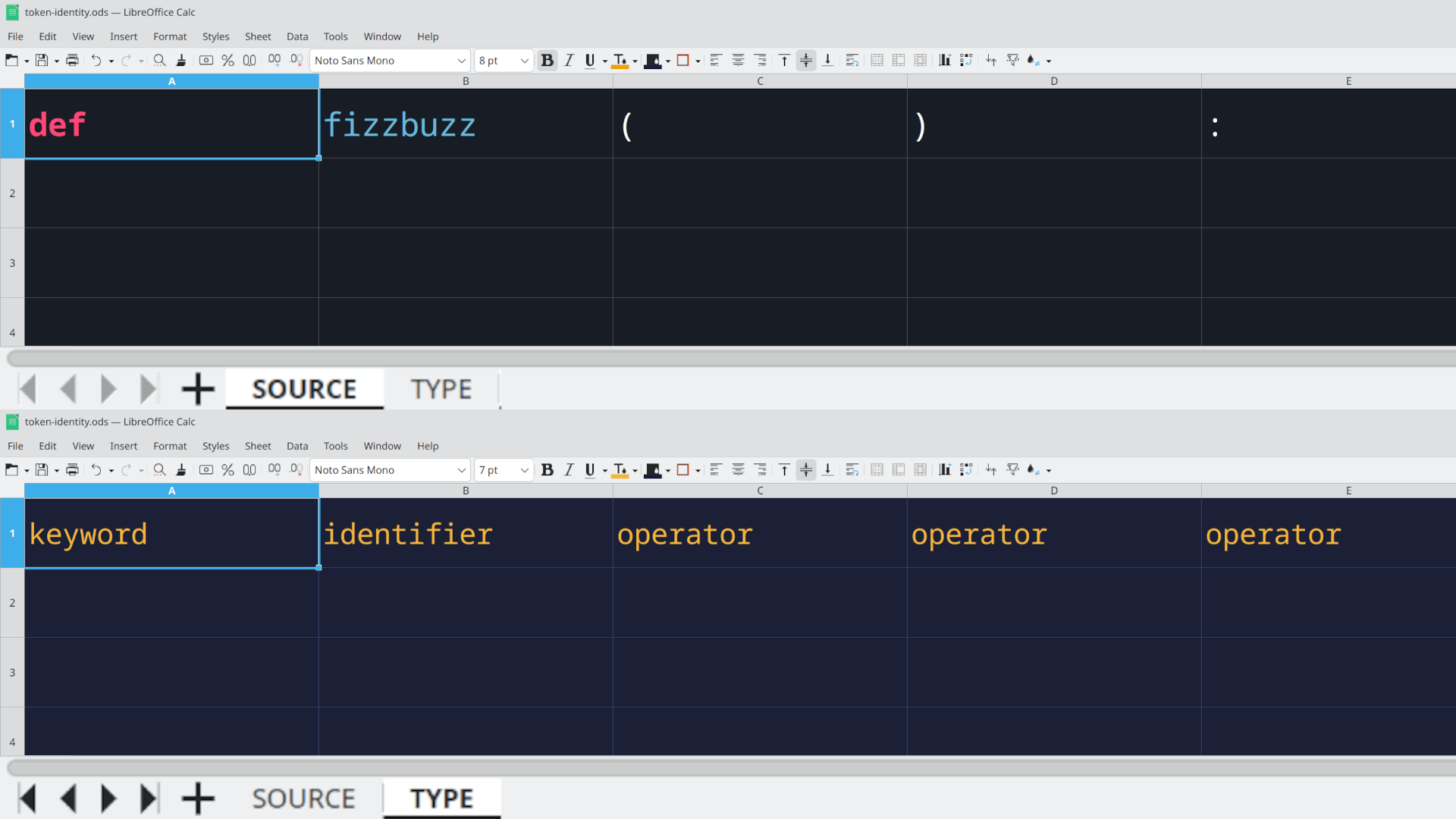

Our tokens reside in the default sheet. Let’s call it SOURCE. Now let’s create a new sheet and call it TYPE. There is a one-to-one correspondence between the cells in the two sheets. In other words, we can use the cell at (x, y) in TYPE to provide some information about the token in SOURCE at the same coordinates.

A reminder that this is a thought experiment⁶.

This solves the token identity crisis entirely. For every token in the SOURCE sheet, the user enters the type of the token in the corresponding cell in TYPE.

This is only possible because of the dimensionality of data in Calc.
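Here is a minimal sketch of the two parallel sheets. It assumes each sheet is a Python dictionary keyed by (x, y) coordinates. The `Token` record and the `read_typed_tokens` function are hypothetical names for illustration. Reading a token's type becomes a lookup at the same coordinates instead of an inference.

```python
from typing import NamedTuple

class Token(NamedTuple):
    content: str  # from the SOURCE sheet
    kind: str     # from the TYPE sheet, at the same coordinates

# Cells are keyed by (x, y): x is the column, y is the row.
SOURCE = {(0, 0): "404", (1, 0): "hello", (2, 0): "hello"}
TYPE = {(0, 0): "class", (1, 0): "string", (2, 0): "variable"}

def read_typed_tokens(source: dict[tuple[int, int], str],
                      types: dict[tuple[int, int], str]) -> list[Token]:
    # Read row by row (y), then left to right (x). The same coordinates index
    # both sheets, so pairing a token with its type is a lookup, not a guess.
    # A missing TYPE cell raises KeyError instead of silently guessing.
    order = sorted(source, key=lambda xy: (xy[1], xy[0]))
    return [Token(source[xy], types[xy]) for xy in order]

for token in read_typed_tokens(SOURCE, TYPE):
    print(token)
```

The two hello tokens have identical content but different types, and nothing about their characters needs to change to keep them apart.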

The parser simply needs to perform a consistency check on the contents of the token and its type (like checking if the contents can be interpreted as a number, or if the keyword exists). This is a much easier problem to solve than the one of inferring token types using grammar rules.
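This consistency check can be a small per-token function with no grammar involved. The sketch below is hypothetical. The type names, the keyword set, and the rule that "free-form" types accept any content are assumptions made for illustration.

```python
KEYWORDS = {"if", "else", "return"}  # hypothetical keyword set
FREE_FORM = {"string", "identifier", "class", "function", "variable"}

def is_consistent(content: str, kind: str) -> bool:
    """Return True if `content` can be interpreted as a token of type `kind`."""
    if kind == "number":
        try:
            float(content)
        except ValueError:
            return False
        return True
    if kind == "keyword":
        return content in KEYWORDS
    # Free-form types accept any characters at all; unknown types are rejected.
    return kind in FREE_FORM

print(is_consistent("404", "number"))  # True
print(is_consistent("404", "class"))   # True: the same content, a different meaning
print(is_consistent("iff", "keyword")) # False: no such keyword
```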

Outcome

Bounded and descriptive tokens require a significant shift from our usual method of writing code. Users now need to do a lot of extra work to manually create token boundaries and describe the type of each token.

There is a solution to this problem, and it exists in the realm of user interfaces. We don’t need to talk about that here because our concern right now is the data structure behind the user interface.

Let’s look at what the new data structure does for us.

Range of communication

Spreadsheet tokens have no restrictions on their character set. A token can be written with any character that the encoding of its file will allow. There are no special characters anymore because the identity of tokens is not determined by the character set at all.

Consequently, spreadsheet tokens will never need to be escaped⁷. The absence of escape characters from the language is a win for user experience and the readability of code.
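A short comparison shows what escaping costs. This sketch uses JSON string syntax, via Python's `json` module, as a stand-in for a typical text language's string literal. The `cell` dictionary is a hypothetical representation of a typed spreadsheet token.

```python
import json

message = 'He said "stop".'

# In a text file, a string literal is delimited by quotes, so every quote
# inside the string must be escaped to avoid colliding with the delimiter.
text_literal = json.dumps(message)
print(text_literal)     # "He said \"stop\"."

# In a spreadsheet token, the cell boundary is the delimiter and the TYPE
# sheet says it is a string, so the content is stored exactly as written.
cell = {"content": message, "type": "string"}
print(cell["content"])  # He said "stop".

# The text form needs an unescaping step to recover the original; the cell does not.
recovered = json.loads(text_literal)
```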

This allows users to be as expressive as they need to be. While limitations on tokens may still be necessary, these would relate to the semantics of the language rather than constraints of the communication protocol.

Design of parsers

Parser design can be simplified with the removal of lexers. A validation step may be required to ensure that token content can be mapped to the token type.

Language grammars can be simplified as well. Any rules that determine a token’s identity can be removed from the grammar entirely. Parsers also do not have to process escape characters anymore.

In short, parsers can focus on higher-level semantics of the language, without worrying about low-level details of communication.
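As a rough illustration, here is a hypothetical parser-evaluator for expressions of the form `operand + operand + ...`. Its input is a list of already-typed (content, type) pairs, as if read from SOURCE and TYPE. The function name and the three token types are assumptions. Note that there is no lexing stage and no unescaping, and that the parser never looks at characters to decide what a token is.

```python
def evaluate(tokens: list[tuple[str, str]]) -> object:
    """Evaluate `operand (+ operand)*` left to right over (content, type) pairs."""

    def operand(content: str, kind: str) -> object:
        # Dispatch on the declared type; the characters are never inspected
        # to decide what the token is.
        if kind == "number":
            return float(content)
        if kind == "string":
            return content  # used verbatim: no quotes to strip, nothing to unescape
        raise ValueError(f"expected an operand, got a {kind} token")

    result = operand(*tokens[0])
    for i in range(1, len(tokens), 2):
        (op, op_kind), right = tokens[i], tokens[i + 1]
        if op_kind != "operator" or op != "+":
            raise ValueError(f"unsupported operator token {op!r}")
        result = result + operand(*right)
    return result

print(evaluate([("1", "number"), ("+", "operator"), ("2", "number")]))  # 3.0
```

A string token whose content is `+` is still just a string here: `evaluate([("+", "string"), ("+", "operator"), ("+", "string")])` returns `"++"`.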

No collisions ever

The effect of the contents of a spreadsheet token is confined to its boundaries. It cannot overflow into or change the meaning of other tokens. The contents of a spreadsheet token also can’t change the interpretation of the token itself because this is defined by its type in another channel.

Spreadsheet tokens are protected against collisions.
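For contrast, here is a collision in a text medium. This sketch uses Python's own `ast` module as the text-based language. The `name` value is a contrived example of content that contains the string delimiter. Once spliced between quotes, the content escapes its token and changes the program's structure. As a typed cell, the same content stays one string token.

```python
import ast

name = 'Bob" + secret + "'

# Text medium: splice the value into source code between quote delimiters.
src = f'greeting = "{name}"'
print(src)  # greeting = "Bob" + secret + ""

# The parser now sees a concatenation involving a variable called secret,
# not a single string literal: the content leaked into the grammar.
value_node = ast.parse(src).body[0].value
print(type(value_node).__name__)  # BinOp

# Spreadsheet medium: the same content sits alone in a cell, typed by TYPE.
cell_token = (name, "string")
print(cell_token[0] == name)  # True: still one string token, unchanged
```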

Spreadsheets aren’t the only way

A cell in a spreadsheet is analogous to an entire text file⁸. This means that text files can also be arranged in multi-dimensional arrays⁹ to get all the same characteristics that I have described for spreadsheets.

Text files have well-defined boundaries just like cells, and you can use them to separate tokens — provided you only store one token per file.
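Here is a minimal sketch of one token per file. It assumes a hypothetical directory layout of `root/<row>/<column>`, where each file holds exactly one token. The helper names are also hypothetical. Recovering the tokens requires only reading files, and whitespace inside a token survives because the file boundary, not a separator character, ends the token.

```python
import tempfile
from pathlib import Path

def write_token_files(root: Path, rows: list[list[str]]) -> None:
    """Store one token per file, at root/<row>/<column> (a hypothetical layout)."""
    for r, row in enumerate(rows):
        for c, token in enumerate(row):
            path = root / str(r) / str(c)
            path.parent.mkdir(parents=True, exist_ok=True)
            path.write_text(token, encoding="utf-8")

def read_token_files(root: Path) -> list[list[str]]:
    """Read the tokens back; each file's boundaries are its token's boundaries."""
    rows = []
    for row_dir in sorted(root.iterdir(), key=lambda p: int(p.name)):
        cells = sorted(row_dir.iterdir(), key=lambda p: int(p.name))
        rows.append([cell.read_text(encoding="utf-8") for cell in cells])
    return rows

program = [["let", "2D Vector", "=", "make vector"], ["print", "2D Vector"]]
with tempfile.TemporaryDirectory() as tmp:
    write_token_files(Path(tmp), program)
    restored = read_token_files(Path(tmp))

print(restored == program)  # True: no lexer needed to recover the tokens
```

A parallel TYPE directory with the same layout would play the role of the TYPE sheet.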

The key point here is that you only get the benefits of boundaries between tokens when you split them across multiple cells (or text files). These boundaries are medium-defined, whereas in the case of a single text file containing the whole program, a lexer or parser would detect boundaries using lexing or grammar rules.

Why not just store the entire abstract syntax tree, then?

I have suggested in this article that we should be storing tokens along with their types and boundaries. This essentially eliminates the lexing step for the parser. It is valid to ask if we can travel further down this route — storing more information in the source content and reducing the effort of the parser.

It is not hard to imagine how this might be useful: we’re spending memory to save CPU cycles. If a file is parsed frequently enough, this might give us a massive performance increase. It would also simplify the design of the parsers. Parsers, instead of processing grammar rules, could be simple tree traversers.
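To show what a "simple tree traverser" could look like, here is a sketch that assumes a hypothetical stored-tree format. Leaves are `("number", text)` and inner nodes are `(operator, left, right)`. Because precedence and structure are already recorded in the tree, evaluation is plain recursion with no grammar rules.

```python
# A hypothetical stored tree: leaves are ("number", text) and inner nodes are
# (operator, left, right). This encodes 1 + 2 * 3 with precedence already decided.
stored = ("+", ("number", "1"), ("*", ("number", "2"), ("number", "3")))

def walk(node: tuple) -> float:
    """Evaluate a stored tree by plain recursive traversal; no grammar rules needed."""
    head = node[0]
    if head == "number":
        return float(node[1])
    left, right = walk(node[1]), walk(node[2])
    if head == "+":
        return left + right
    if head == "*":
        return left * right
    raise ValueError(f"unknown node type {head!r}")

print(walk(stored))  # 7.0
```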

Performance aside, I don’t see any benefit to storing the abstract syntax tree¹⁰. Bounded, descriptive tokens create communication side channels with which (human) users can clearly encode their intent. This gives us a wider range of communication and protects us from collisions. Everything beyond this can be reconstructed, so I don’t see the advantage of storing abstract syntax trees.

Conclusion

Thank you for coming along with me on this massive hypothetical. This article has been on my mind for many years now, and I was so excited to post this that I haven’t even talked about some minor stuff (like null tokens).

I am sure you are not convinced yet.

I’m working on a larger idea about the separation of concerns in computer languages that will make a stronger case for what I have written here. See you in the next one!

1. Not to be confused with Plaintext (Cryptography).
2. Symbol instead of character might be more accurate.
3. I know cells store rich-text, not plain-text, but let’s pretend otherwise for this article.
4. No scope involved; that’s what the function is called.
5. You obviously shouldn’t do this, but I want you to be able to do this if you need to.
6. I know this user interface is terrible. I’m not saying we should actually be doing this. I’m trying to make a point.
7. Except for non-printable characters, of course, but these were never escaped for the purpose of avoiding collisions.
8. I have chosen the example of spreadsheets here because it’s easier to consider one token per cell than one token per file, even though it’s the same idea in both cases. The difference is only in our mental models and user interfaces.
9. In fact, with text files, you could arrange your tokens in a tree, which better reflects the structure of languages.
10. A statement I am sure I will regret with hindsight.